Direct Synchronization, aka SyncML Server

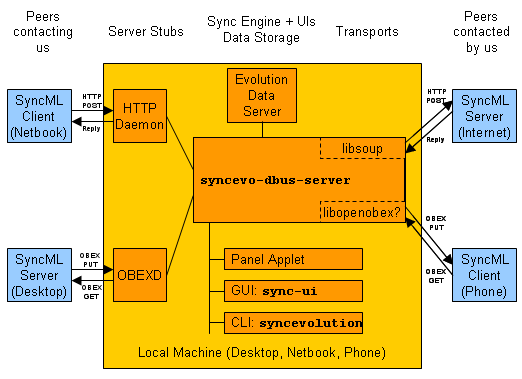

This is a very preliminary system architecture for direct device-to-device and device-to-desktop synchronization with SyncEvolution and the Synthesis SyncML Engine. So far, SyncEvolution only acts as a SyncML client. For direct synchronization, it also needs to act as a SyncML server. The Synthesis SyncML Engine supports both roles to a large extent, but SyncEvolution still has to add some APIs and classes for the server role.

The goal of this article is to facilitate the discussion of this new feature before starting to implement it. Most likely the design is full of mistakes and gaps, so please tell us about them on the SyncEvolution mailing list. This document will be updated as the design evolves, so hopefully it’ll turn into a reference document for a working implementation at some point.

Usage Models

SyncEvolution is expected to cover the full range of devices, from mobile devices to netbooks, laptops and desktops. In principle, all of these devices are capable of running SyncEvolution as both SyncML server and client. In practice, it is more likely that small, portable devices like phones act as simple SyncML clients, whereas more powerful devices take on the more demanding server role and thus do tasks like data comparison and merging. The key difference is that the server can have a more elaborate GUI to control the sync (manage devices, interactively merge data, …).

The system diagram distinguishes between peers which are contacted by the local machine on behalf of its user and peers which contact us. With the HTTP binding of SyncML, the SyncML client contacts the server. With OBEX (Bluetooth, USB, IrDA), the roles are reversed: the user controls the SyncML server on the “smart” device (PC, netbook) and asks it to contact the “dumb” device (phone).

In all of these use cases, the data which is synchronized is part of the user’s environment. More specifically, in Moblin it is stored in the Evolution Data Server (EDS). If multiple users share the same machine, each user’s data is stored in that user’s home directory. In contrast, a traditional SyncML server typically stores data of many different users in one large database. Implementing such a server is not the goal of this project. There are already several viable implementations of such a multi-user SyncML server, including the one from Synthesis itself.

Server Stubs + Interprocess Communication

In this design, connection requests are handled by transport-specific “server stubs”. They extract the SyncML messages, pass that data to the sync engine, and return the reply. The goal is to implement them in such a way that no SyncML message parsing is necessary inside the stubs. The stubs run in their own processes, and D-Bus is used as the communication mechanism between the different processes running on the local machine. Such a design has the following advantages:

Server stubs can be developed, maintained, and installed separately from SyncEvolution itself.

The D-Bus API provided by the core sync engine can be small (start session, send/receive opaque messages).

A single entity controls all sync sessions and user interaction.

The syncevo-dbus-server can be started on demand. Together with starting the stubs on demand (inetd) or embedding them inside another process (obexd), this reduces the average memory footprint and system startup time.

The impact of crashes is minimized, because a crash in one process does not take down the others.
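To make the idea of a small API for opaque messages concrete, here is a minimal Python sketch. All names (`SyncEngine`, `start_session`, `process`, `EchoEngine`, `relay`) are hypothetical stand-ins for whatever the D-Bus interface ends up looking like; the point is only that a stub forwards bytes plus a MIME type and never parses SyncML.

```python
from abc import ABC, abstractmethod
from typing import Dict, Tuple


class SyncEngine(ABC):
    """Hypothetical engine API: start a session, exchange opaque messages."""

    @abstractmethod
    def start_session(self) -> str:
        """Create a new session and return its ID."""

    @abstractmethod
    def process(self, session: str, data: bytes, mime_type: str) -> Tuple[bytes, str]:
        """Consume one incoming message, return (reply, reply MIME type)."""


class EchoEngine(SyncEngine):
    """Test double: replies with whatever it received."""

    def __init__(self) -> None:
        self.messages: Dict[str, int] = {}

    def start_session(self) -> str:
        session = f"s{len(self.messages) + 1}"
        self.messages[session] = 0
        return session

    def process(self, session: str, data: bytes, mime_type: str) -> Tuple[bytes, str]:
        self.messages[session] += 1  # count messages per session
        return data, mime_type


def relay(engine: SyncEngine, session: str, body: bytes, content_type: str) -> Tuple[bytes, str]:
    """All a stub does per request: hand the payload over untouched."""
    return engine.process(session, body, content_type)
```

Because the stub only sees `bytes` and a content type, it stays independent of the SyncML encoding (XML or WBXML) and of any changes inside the engine.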

The dependency on D-Bus is already imposed by EDS in Moblin, and the GUI heavily depends on it, too. Therefore using D-Bus instead of some other interprocess communication mechanism does not introduce new constraints. Systems without D-Bus are certainly feasible (see “Replace D-Bus” in “Alternative Setups and Implementation Remarks”), and patches and maintainers for them are welcome if there is demand.

Contexts

In a simple setup, all of the processes run under the same user ID and have access to the same D-Bus session bus. Without such a session bus, synchronization is not possible. A D-Bus session can be created if the user is not logged in, and a process that was started without the right DBUS_SESSION_BUS_ADDRESS environment variable (background daemon, server stubs) can connect to an existing session.

obexd runs as a normal user, with access to the D-Bus session bus. Therefore it fits into this architecture without further complications.

In a more complicated setup, the stubs run under a different user ID. They have to identify the user that a request is meant for and then need sufficient privileges to connect to that user’s D-Bus session. With HTTP, the user could be identified by parts of the URL, which avoids parsing the SyncML message. Access to the D-Bus session could be obtained by running as root. It would be easier to avoid such a setup in the first place, though.
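As an illustration of identifying the target user from the URL alone, the sketch below assumes a hypothetical URL layout `/sync/<user>` and a plain set of known local users; neither is part of the design yet. Only the request path is inspected, never the SyncML body.

```python
from typing import Optional, Set
from urllib.parse import urlsplit


def user_from_url(url: str, known_users: Set[str]) -> Optional[str]:
    """Return the local user a request is meant for, based only on the URL.

    Assumes the hypothetical layout /sync/<user>[/...]; anything else is
    rejected so that the stub never has to look at the message itself.
    """
    parts = [p for p in urlsplit(url).path.split("/") if p]
    if len(parts) >= 2 and parts[0] == "sync" and parts[1] in known_users:
        return parts[1]
    return None
```

Rejecting unknown names up front also means a stub running under a different user ID never tries to reach a D-Bus session for an arbitrary account.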

Authentication

Authentication is primarily the responsibility of the server stubs. Anyone who is able to contact the core engine is typically also able to access the data directly, so it makes more sense to harden the network-facing parts of this design. The stubs can implement authentication using existing mechanisms (HTTP authentication, Bluetooth pairing) or skip it altogether where that is acceptable (local connections).

As a fallback, the core engine can also be told to verify that the credentials sent as part of the SyncML messages match the preconfigured ones or the ones of the local user.
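For HTTP, a stub could rely on HTTP Basic authentication. The following sketch checks an `Authorization` header against preconfigured credentials passed in as plain parameters; the function name is hypothetical. It uses `hmac.compare_digest` so that the comparison time does not reveal how much of a field matched.

```python
import base64
import binascii
import hmac
from typing import Optional


def check_basic_auth(header: Optional[str], username: str, password: str) -> bool:
    """Return True if an 'Authorization: Basic ...' header matches the credentials."""
    if not header or not header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(header[len("Basic "):], validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return False  # malformed header: treat as failed authentication
    user, sep, pw = decoded.partition(":")
    if not sep:
        return False
    # Compare both fields without short-circuiting on the first mismatch.
    user_ok = hmac.compare_digest(user.encode(), username.encode())
    pw_ok = hmac.compare_digest(pw.encode(), password.encode())
    return user_ok and pw_ok
```

The same comparison could serve as the engine-side fallback, applied to the credentials found in the SyncML message instead of the HTTP header.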

Communication + Concurrency

The SyncML protocol itself already provides flow control (because of its send/receive communication style), avoids overloading peers (a maximum message size is enforced by sending large objects in multiple messages), tolerates message and connection loss (messages without a reply can be resent), and can suspend a running or interrupted session and resume it later. A server can keep connections alive by sending empty messages during long-running operations. The Synthesis implementation supports all of this.
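The large-object mechanism can be pictured as follows: an item that does not fit into one message is cut into chunks, and every chunk except the last is flagged as incomplete (SyncML uses a MoreData element for this). The sketch only models the splitting; `max_chunk` is a simplified stand-in for the negotiated maximum message size, which in practice also has to leave room for protocol overhead.

```python
from typing import Iterable, Iterator, Tuple


def split_large_object(data: bytes, max_chunk: int) -> Iterator[Tuple[bytes, bool]]:
    """Yield (chunk, more_data) pairs; more_data is True for all but the last chunk."""
    if max_chunk <= 0:
        raise ValueError("max_chunk must be positive")
    if not data:
        yield b"", False
        return
    for start in range(0, len(data), max_chunk):
        chunk = data[start:start + max_chunk]
        yield chunk, start + max_chunk < len(data)


def reassemble(chunks: Iterable[Tuple[bytes, bool]]) -> bytes:
    """Receiver side: concatenate chunks until one arrives without the flag."""
    parts = []
    for chunk, more_data in chunks:
        parts.append(chunk)
        if not more_data:
            break
    return b"".join(parts)
```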

Currently, the GUI and syncevo-dbus-server support only one active sync session at a time. This is both a conceptual simplification and a limitation of the syncevo-dbus-server implementation. It is simpler because it avoids concurrent modifications of the same data (which could still happen with smaller probability if the user edits the data in parallel with a sync and thus should be handled) and reduces the complexity of the GUI. In syncevo-dbus-server, the sync session is executed by handing over control to an instance of the EvolutionSyncClient class. Control is returned to the main program temporarily in a callback whenever data needs to be sent or received, which is done via asynchronous libsoup message transfers (for HTTP). This runs inside the same glib event loop which also processes D-Bus communication.

Processing SyncML messages and exchanging data with EDS inside syncevo-dbus-server may block for a while (several seconds or more). While this processing takes place, D-Bus communication is not handled. The GUI needs to stay responsive, so it has to invoke such operations completely asynchronously.
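The difference between the blocking server and the non-blocking GUI can be sketched with asyncio. Here `slow_engine_call` is a hypothetical stand-in for a D-Bus call into syncevo-dbus-server that may block for seconds; it runs in a worker thread via `asyncio.to_thread`, so the GUI's event loop keeps handling other work (the tick counter stands in for redrawing). A real GUI would more likely use the asynchronous call support of its D-Bus binding than a thread.

```python
import asyncio
import time
from typing import Tuple


def slow_engine_call(seconds: float) -> str:
    """Stand-in for a blocking request to syncevo-dbus-server."""
    time.sleep(seconds)
    return "sync done"


async def gui_main() -> Tuple[str, int]:
    ticks = 0

    async def keep_ui_alive() -> None:
        nonlocal ticks
        while True:
            ticks += 1  # e.g. redraw a progress spinner
            await asyncio.sleep(0.01)

    ui = asyncio.create_task(keep_ui_alive())
    # The blocking call runs in a thread, so the event loop is never stalled.
    result = await asyncio.to_thread(slow_engine_call, 0.2)
    ui.cancel()
    try:
        await ui
    except asyncio.CancelledError:
        pass
    return result, ticks
```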

Session and Config Handling

To simplify message routing inside the server stubs and the upper layers of syncevo-dbus-server, syncevo-dbus-server creates a session ID for each new connection request. All following messages must be sent to the server with that ID in the metadata (that is, outside of the SyncML message itself) so that the message does not have to be parsed redundantly.

With HTTP, the URL encoded inside the server’s reply contains a “?session=” string, so all following POSTs in that session carry that information in a way that is accessible to the HTTP daemon.
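A minimal sketch of how a session ID could travel in the URL, using only the standard `urllib.parse` and `secrets` modules: the engine side adds the ID to the URL it sends back, and the HTTP stub reads it from the query string of the next POST without touching the SyncML body. The helper names and the use of `secrets.token_urlsafe` are assumptions for illustration.

```python
import secrets
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit


def new_session_id() -> str:
    """Random, URL-safe session ID that reveals nothing about the local config."""
    return secrets.token_urlsafe(16)


def add_session(url: str, session: str) -> str:
    """Return url with a 'session' query parameter, keeping any existing query."""
    scheme, netloc, path, query, fragment = urlsplit(url)
    params = parse_qs(query)
    params["session"] = [session]
    return urlunsplit((scheme, netloc, path, urlencode(params, doseq=True), fragment))


def session_from_url(url: str) -> Optional[str]:
    """What the HTTP daemon does: read the ID from the query string only."""
    values = parse_qs(urlsplit(url).query).get("session")
    return values[0] if values else None
```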

With OBEX, something similar is possible: the initial connect request assigns a connection ID which is then used for all related message exchanges. However, when the connection is lost the session cannot continue because a new connection request cannot be associated with the existing session.

Each synchronization session must have a local configuration. When the synchronization is started locally, with SyncEvolution as SyncML client, the configuration defines the remote sync URL (http(s):// for HTTP transport, something else for OBEX, TBD), the local databases and the corresponding remote URIs, the credentials for that peer, etc.

When accepting a connection request, syncevo-dbus-server has to determine the corresponding configuration based on meta information (the sync URL in HTTP) or use a default configuration. Such a configuration can be shared by several peers. The peer only gets identified as part of the SyncML session startup; at that point the local databases can be initialized to synchronize with that peer. This deviates a bit from the current approach, where the peer is known in advance, so changes in the internal SyncSource API will be necessary.

When a configuration is used for a SyncML server session, the username/password are the credentials which have to be provided by the peer, and the URIs are the keys which map to local databases. If authentication is enabled and done via configured credentials, an empty username/password indicates that the peer has to provide the credentials of the local user account.
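To illustrate how the same configuration fields change meaning in the server role, here is a sketch with a hypothetical `PeerConfig` dataclass (the field names are not SyncEvolution’s actual configuration properties). The URIs become keys that map to local databases, and the stored credentials are what the peer must present, with empty ones falling back to the local user account. `local_user_check` is a hypothetical hook for verifying that account.

```python
import hmac
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class PeerConfig:
    sync_url: str = ""                                   # only used in the client role
    username: str = ""
    password: str = ""
    uri_to_database: Dict[str, str] = field(default_factory=dict)


def authorize(config: PeerConfig, user: str, password: str,
              local_user_check: Callable[[str, str], bool]) -> bool:
    """Server role: are the credentials sent by the peer acceptable?"""
    if not config.username and not config.password:
        # Empty configured credentials: the peer must present the local account's.
        return local_user_check(user, password)
    user_ok = hmac.compare_digest(user.encode(), config.username.encode())
    pw_ok = hmac.compare_digest(password.encode(), config.password.encode())
    return user_ok and pw_ok


def database_for(config: PeerConfig, uri: str) -> Optional[str]:
    """Server role: map a URI requested by the peer to a local database."""
    return config.uri_to_database.get(uri)
```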

For a server-initiated sync (the normal use case with OBEX), two configurations are needed: the server configuration and a configuration that defines the URIs on the peer. It is not easy to determine which URIs and data formats the peer supports; this problem is ignored for the time being.

Even if syncevo-dbus-server supported multiple concurrent sessions, only one session may be active per configuration, so the configuration name could be used as session ID. This has the advantage that a list of active sessions is readily understandable by a human (“scheduleworld”, “my phone”), and the disadvantage that it leaks a small bit of information to the peer (the name of the local configuration). Because it is not hard to generate a random string and map it to the configuration, a random session ID is the better approach.
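A possible shape for this bookkeeping, written as a hypothetical `SessionRegistry` class: each session gets a random ID that is mapped to its configuration, and a second session for a configuration that already has an active one is refused.

```python
import secrets
from typing import Dict


class SessionRegistry:
    """At most one active session per configuration, keyed by a random ID."""

    def __init__(self) -> None:
        self._config_of: Dict[str, str] = {}  # session ID -> configuration name

    def start(self, config: str) -> str:
        if config in self._config_of.values():
            raise RuntimeError(f"configuration {config!r} already has an active session")
        session = secrets.token_urlsafe(16)
        self._config_of[session] = config
        return session

    def config_for(self, session: str) -> str:
        return self._config_of[session]  # KeyError for unknown or finished sessions

    def finish(self, session: str) -> None:
        del self._config_of[session]
```

The peer only ever sees the opaque token, while a local listing can still show the human-readable configuration names.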

Sequence Diagram + D-Bus API

Here’s the sequence of events when accepting an OBEX connection request.

1. obexd: publish SyncML as a Bluetooth service.
2. Third-party SyncML server: pair with the Bluetooth stack, create a connection to obexd.
3. obexd: instantiate the org.syncevolution.sync service, asynchronously call connect(config="", session=0, must_authenticate=false): default configuration, new session, peer is already authenticated.
4. syncevo-dbus-server: look up the default configuration (config="") and accept the connection, returning a connection=org.syncevolution.connection instance and the corresponding session number.
5. obexd: accept the OBEX connection and remember the connection instance. The session number can be ignored because it cannot be used for reconnect requests.
6. SyncML server: PUT the SyncML Notification message.
7. obexd: gather the complete data of all PUTs, call connection.process(data=byte array, mime type=string).
8. syncevo-dbus-server:
   - check the type to distinguish between a server-initiated sync (“application/vnd.syncml.ds.notification”, supported) and a SyncML client (“application/vnd.syncml+(wb)xml”, not supported yet)
   - instantiate EvolutionSyncClient based on parameters in the notification message, with transporthandle as transport agent
   - run the sync and let it produce the first SyncML message
   - return that message as the result of connection.process(), then go idle
9. SyncML server: send a GET.
10. obexd: wait for the GET and the SyncEvolution reply, then send the reply data in response to the GET.
11. obexd + syncevo-dbus-server: process more PUT/GET exchanges in the same way until connection.process() flags final=true, indicating the end of the session, but with the connection handle still valid.
12. obexd: close the connection, call connection.close(success=true/false) indicating whether the connection was shut down properly.
13. syncevo-dbus-server: treat the last message as sent, close down the sync session, free the connection.
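This exchange can be simulated end to end in a few lines of Python. It is only a model of the proposed flow: `FakeSyncService`, `FakeConnection`, `obexd_session` and the canned replies are hypothetical stand-ins for org.syncevolution.sync and org.syncevolution.connection, and nothing here talks to D-Bus or OBEX. It does show the division of labour: the obexd side only concatenates PUT data, calls `process()`, hands each reply to the next GET, and closes once `final` is set.

```python
from typing import List, Optional, Tuple

NOTIFICATION = "application/vnd.syncml.ds.notification"


class FakeConnection:
    """Stand-in for an org.syncevolution.connection instance."""

    def __init__(self, replies: List[bytes]) -> None:
        self._replies = list(replies)  # canned engine replies; the last one is final
        self.received: List[bytes] = []
        self.closed_ok: Optional[bool] = None  # set by close()

    def process(self, data: bytes, mime_type: str) -> Tuple[bytes, bool]:
        if not self.received and mime_type != NOTIFICATION:
            raise NotImplementedError("SyncML client role not supported yet")
        if not self._replies:
            raise RuntimeError("session already finished")
        self.received.append(data)
        reply = self._replies.pop(0)
        return reply, not self._replies  # (reply, final)

    def close(self, success: bool) -> None:
        self.closed_ok = success


class FakeSyncService:
    """Stand-in for the org.syncevolution.sync service."""

    def __init__(self, replies: List[bytes]) -> None:
        self._replies = replies
        self._next_session = 1

    def connect(self, config: str, session: int, must_authenticate: bool):
        # config="" selects the default configuration, session=0 requests a new one.
        number = self._next_session
        self._next_session += 1
        return FakeConnection(self._replies), number


def obexd_session(service: FakeSyncService, incoming):
    """Model of the obexd side; incoming is a list of (PUT chunks, MIME type)."""
    conn, _number = service.connect(config="", session=0, must_authenticate=False)
    sent: List[bytes] = []
    final = False
    try:
        for chunks, mime_type in incoming:
            reply, final = conn.process(b"".join(chunks), mime_type)
            sent.append(reply)  # returned to the peer in response to its next GET
            if final:
                break
    except Exception:
        conn.close(success=False)
        raise
    conn.close(success=final)  # only a session that reached final=true ended properly
    return sent, conn
```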

Alternative Setups and Implementation Remarks

This section captures some random remarks about aspects that are not part of the design itself.

HTTP Daemon Stub: Prototype

There is no immediate need for a full-fledged HTTP daemon. A simple prototype which runs in the same context as syncevo-dbus-server would be enough. It could be written in a higher-level scripting language (Python?) to test and demonstrate the D-Bus API.
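A throwaway prototype along those lines might look like the following, using only Python’s standard `http.server`. The `engine` callable is a hypothetical placeholder for the D-Bus calls into syncevo-dbus-server; the session is read from the URL’s query string as outlined in “Session and Config Handling”, and the default MIME type is an assumption.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlsplit


def make_handler(engine):
    """engine(session, body, content_type) -> (reply bytes, reply content type)."""

    class SyncMLStub(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            body = self.rfile.read(length)
            ctype = self.headers.get("Content-Type", "application/vnd.syncml+xml")
            # Session from the URL only; the SyncML body is never parsed here.
            session = parse_qs(urlsplit(self.path).query).get("session", [""])[0]
            reply, reply_type = engine(session, body, ctype)
            self.send_response(200)
            self.send_header("Content-Type", reply_type)
            self.send_header("Content-Length", str(len(reply)))
            self.end_headers()
            self.wfile.write(reply)

        def log_message(self, format, *args):
            pass  # keep the prototype quiet

    return SyncMLStub
```

It could be started with `ThreadingHTTPServer(("localhost", 9000), make_handler(engine)).serve_forever()`, where port 9000 is an arbitrary choice.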

Replace D-Bus

Other IPC mechanisms are certainly possible. Patches which add them for other platforms (Windows? Mac OS X?) are welcome, as long as someone volunteers to maintain the code on those platforms.

SyncEvolution as Library

libsyncevolution can be used in simple applications without depending on any kind of IPC mechanism. For SyncML clients this is often good enough. In fact, the traditional syncevolution command line tool is implemented this way, although it should be rewritten to also work as a frontend to syncevo-dbus-server.

It wouldn’t be hard to extend libsyncevolution so that it also supports listening for incoming connection requests directly; libsoup supports this.

Synchronizing Multiple Peers

SyncML is always peer-to-peer. To keep a group of peers in sync, the local machine needs to synchronize with each of them at least once and continue iterating until there are no pending local changes (the last peer in the first iteration might send changes which need to be forwarded to all of the peers synced with already).
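The iteration can be modelled as a small fixed-point loop. In this toy sketch, each hypothetical `Peer` holds a set of items and a two-way sync simply merges both sets; real syncs also have to deal with updates, deletions and conflicts, which are ignored here. Full rounds are repeated until one round changes nothing, at which point every peer holds every item.

```python
from typing import List, Set


class Peer:
    def __init__(self, name: str, items: Set[str]) -> None:
        self.name = name
        self.items = set(items)


def two_way_sync(local: Set[str], peer: Peer) -> bool:
    """Merge both sides; return True if either side changed."""
    merged = local | peer.items
    changed = merged != local or merged != peer.items
    local |= merged  # update the caller's local store in place
    peer.items = set(merged)
    return changed


def sync_all(local: Set[str], peers: List[Peer]) -> int:
    """Sync with every peer, repeating rounds until a round changes nothing."""
    rounds = 0
    while True:
        rounds += 1
        changed = False
        for peer in peers:
            changed |= two_way_sync(local, peer)
        if not changed:
            return rounds
```

With three stores that each start with one distinct item, the first round pulls everything into the local store, the second forwards the last peer’s item to the first peer, and the third confirms that nothing is pending.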

Automatic Syncs

EDS can notify interested parties about changes (via “views”). Some entity could be added to the system to create such a view and tell syncevo-dbus-server to run a sync (after a short delay, to batch changes).
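The “short delay, to batch changes” can be implemented as a debounce timer: every change notification restarts the timer, and a sync is only requested once no further change has arrived for `delay` seconds. The sketch uses asyncio; `ChangeBatcher` and `request_sync` are hypothetical, the latter standing in for the call to syncevo-dbus-server, and the EDS view is simulated by calling `notify()` directly.

```python
import asyncio
from typing import Callable, Optional


class ChangeBatcher:
    """Debounce change notifications into a single sync request."""

    def __init__(self, delay: float, request_sync: Callable[[int], None]) -> None:
        self.delay = delay
        self.request_sync = request_sync
        self.pending = 0
        self._timer: Optional[asyncio.TimerHandle] = None

    def notify(self) -> None:
        """Called for every change reported by the (simulated) EDS view."""
        self.pending += 1
        if self._timer is not None:
            self._timer.cancel()  # restart the delay on every new change
        loop = asyncio.get_running_loop()
        self._timer = loop.call_later(self.delay, self._fire)

    def _fire(self) -> None:
        count, self.pending, self._timer = self.pending, 0, None
        self.request_sync(count)  # one sync for all changes seen so far
```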

Detecting changes on one of the peers is harder. Without some kind of notification by the peer (“push”), the local machine has to poll each peer at regular intervals.